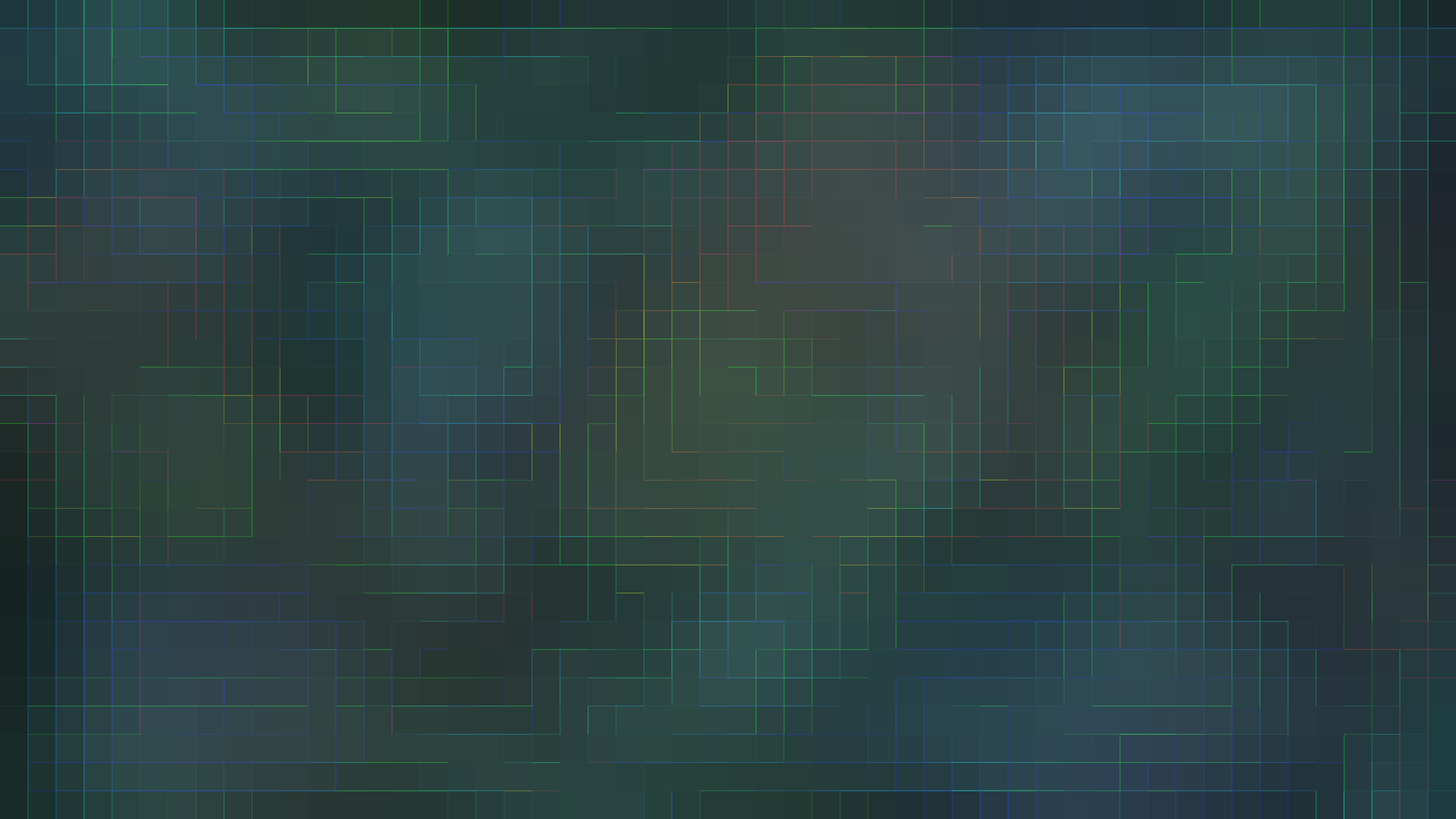

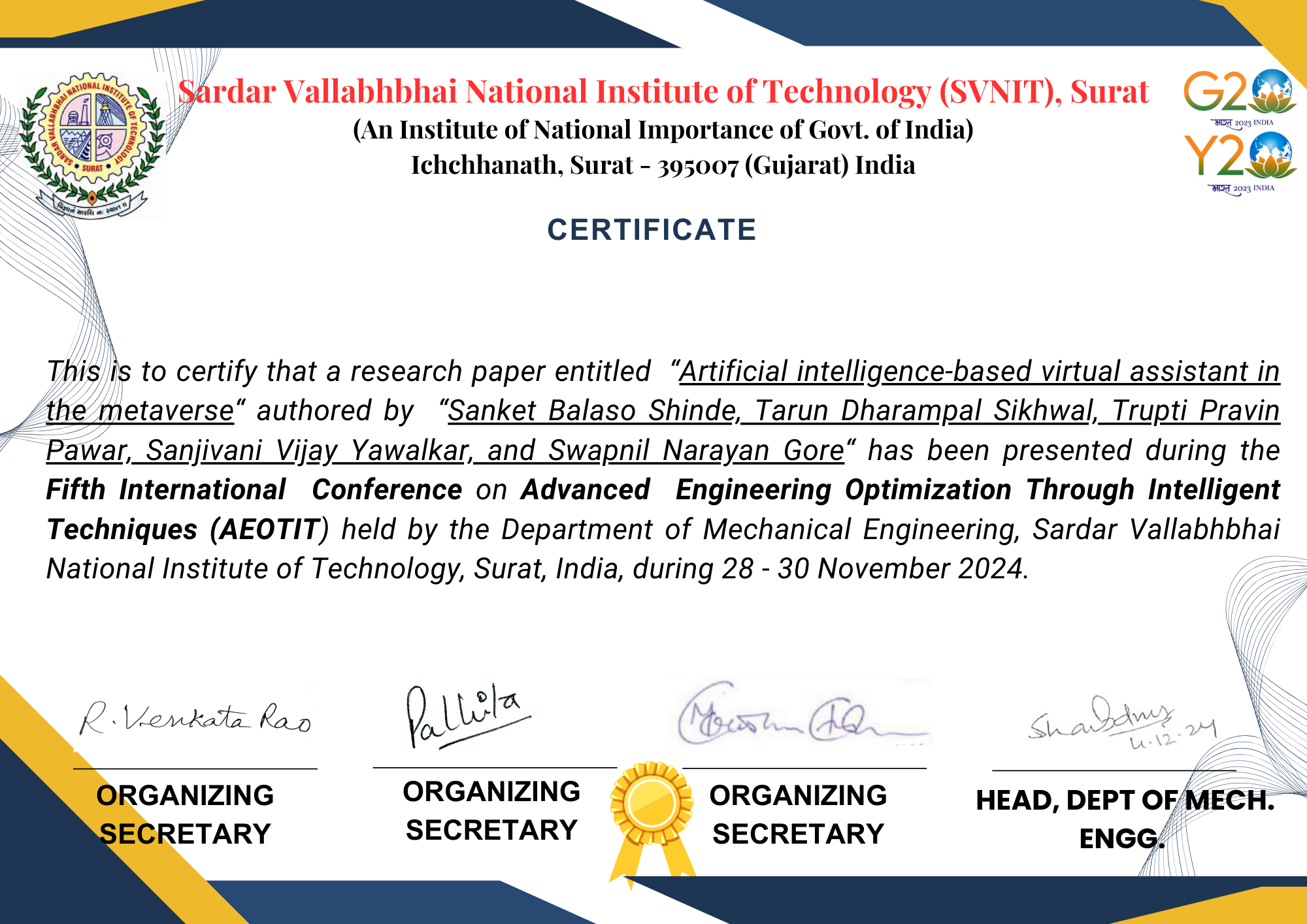

Bridging AI Assistants with Multiplayer Metaverse Experiences

Focus Area: Advanced AI, AR/VR & Metaverse

Required Equipment: Meta Quest 2 / Pro

Team Lead: Tarun Sikhwal

Team Members: Sanket Shinde, Swapnil Gore, Trupti Pawar, Sanjivani Yawalkar.

The project connects users in a shared virtual world, each represented by an avatar and accompanied by a personal AI-powered virtual assistant. Using machine learning and Metaverse technology, the assistant provides real-time support and personalized guidance within the virtual world. A key innovation is multiplayer interaction: users can talk to each other by voice while their AI Assistants are present, which fosters community engagement and contextual knowledge sharing.

Current VR platforms lack personalized assistance. Users struggle with complex navigation and resource management, limiting the potential for education and social interaction.

The project integrates a 3D AI Assistant for every user. Each assistant uses NLP to respond to natural language commands and follows its user through the environment using NavMesh agents.
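The command-and-follow behaviour described above can be illustrated with a minimal, engine-independent sketch. It is not the project's implementation: a simple keyword lookup stands in for the NLP component, straight-line movement stands in for NavMesh pathfinding, and every name here (`Vec2`, `follow_step`, `parse_command`, `INTENTS`, the default `stopping_distance`) is a hypothetical chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec2:
    """Position on the ground plane (hypothetical type for this sketch)."""
    x: float
    z: float


def follow_step(assistant: Vec2, user: Vec2, speed: float, dt: float,
                stopping_distance: float = 1.5) -> Vec2:
    """Move the assistant toward the user, halting at stopping_distance.

    Assumption: straight-line motion replaces real NavMesh pathfinding.
    """
    dx, dz = user.x - assistant.x, user.z - assistant.z
    dist = math.hypot(dx, dz)
    if dist <= stopping_distance:
        return assistant  # already close enough, stay put
    # Never step past the stopping radius in a single tick.
    step = min(speed * dt, dist - stopping_distance)
    return Vec2(assistant.x + dx / dist * step, assistant.z + dz / dist * step)


# Hypothetical intents. "stay" is listed first so that an utterance such as
# "stop following me" resolves to "stay" rather than "follow".
INTENTS = {
    "stay": ("stay", "wait", "stop"),
    "follow": ("follow", "come", "with me"),
}


def parse_command(utterance: str) -> str:
    """Keyword stand-in for the NLP component: map an utterance to an intent."""
    text = utterance.lower()
    for intent, keywords in INTENTS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "unknown"


if __name__ == "__main__":
    assistant, user = Vec2(0.0, 0.0), Vec2(10.0, 0.0)
    if parse_command("Please follow me") == "follow":
        assistant = follow_step(assistant, user, speed=2.0, dt=1.0)
    print(assistant)
```

Clamping the step to the remaining distance keeps the assistant from overshooting and jittering around the user, which is the same role a stopping distance plays for a navigation agent.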


3D Environment development and real-time physics rendering.

Handles real-time multiplayer synchronization and voice chat.

Full immersion tracking for head and hand movement.